My main development machine used to be a 2010 MacBook Pro with a 512 GB SSD. Expensive, but way fast. Unfortunately the GPU died about a year ago, so I've been using a 2011 iMac. It's also fast, if not faster than the MBP for many things, but disk operations are noticeably slower.

A few weeks ago on Dave Verwer's brilliant iOS Dev Weekly I found a blog post explaining how you could speed up Xcode (and AppCode) build times. This is done by moving Xcode's DerivedData folder as well as iOS Simulator Data to a RAM disk.

A RAM disk takes a chunk of memory and treats it as if it were a drive. SSDs are fast, but memory is still much faster. If you have extra memory lying around I recommend giving this a shot.

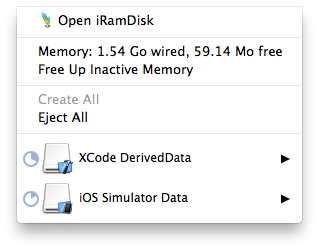

I didn't go the Terminal route but instead used iRamDisk from the Mac App Store. I was skeptical, but it definitely works.
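For anyone curious about the Terminal route I skipped, here is a minimal sketch of what it roughly involves on macOS: `hdiutil attach -nomount ram://<sectors>` allocates the memory, and `diskutil erasevolume` formats and mounts it. This is not what I used, so treat it as an illustration. The helper names (`ram_sectors`, `attach_command`, `format_command`, `create_ram_disk`) and the volume names are made up for the example, and the sizes simply mirror the ones I picked in iRamDisk.

```python
import subprocess

# hdiutil's ram:// URL takes its size as a count of 512-byte sectors.
SECTOR_BYTES = 512


def ram_sectors(megabytes: int) -> int:
    """Convert a size in MB (1 MB = 1024 * 1024 bytes) to 512-byte sectors."""
    return megabytes * 1024 * 1024 // SECTOR_BYTES


def attach_command(megabytes: int) -> list[str]:
    """Build the hdiutil command that allocates an unformatted RAM device."""
    return ["hdiutil", "attach", "-nomount", f"ram://{ram_sectors(megabytes)}"]


def format_command(volume_name: str, device: str) -> list[str]:
    """Build the diskutil command that formats the device and mounts it."""
    return ["diskutil", "erasevolume", "HFS+", volume_name, device]


def create_ram_disk(volume_name: str, megabytes: int) -> str:
    """Create and mount a RAM disk (macOS only); returns the assumed mount point."""
    result = subprocess.run(
        attach_command(megabytes), check=True, capture_output=True, text=True
    )
    device = result.stdout.strip()  # hdiutil prints the device node, e.g. /dev/disk2
    subprocess.run(format_command(volume_name, device), check=True)
    return f"/Volumes/{volume_name}"


if __name__ == "__main__":
    # Only print the commands here; call create_ram_disk() to actually run them.
    # Volume names and sizes are illustrative assumptions.
    for name, size_mb in [("DerivedData", 1024), ("Simulator", 512)]:
        print(" ".join(attach_command(size_mb)))
        print(" ".join(format_command(name, "/dev/diskN")))
```

Keep in mind that a RAM disk created this way disappears on eject or reboot, so it would need to be recreated at login, and Xcode still has to be pointed at the new volume in its Locations preferences. That housekeeping is a big part of why I went with an app instead.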

Building and running an app I'm currently working on into a clean iOS Simulator used to take 20 seconds. Using iRamDisk it now takes 7. That's huge.

Regular builds are also much faster, and the difference is very noticeable after doing a Product > Clean.

DerivedData gets a gig

The Simulator gets 512 MB

iRamDisk's dropdown in the menu bar also shows how full each disk is, and you can easily flush them via a menu option.

Menu options

If you have extra memory lying around I'd give it a shot.